Identify, in a continuous way, your web attack surface exposed on the Internet, using Open-Source Software

Glossary

📖 This section contains the collection of terms used in this post:

- Attack surface: The set of points on the boundary of a system, a system element, or an environment where an attacker can try to enter, cause an effect on, or extract data from it (source).

- Attack vector: An attack vector, or threat vector, is a way for attackers to enter a network or system (source).

- Shadow IT: It is the usage of IT-related hardware or software by a department or individual without the knowledge of the IT or security group within the organization (source).

- Reconnaissance: Techniques and methodology necessary to gather information about your target system secretly (source).

- Red Team exercises: An exercise, reflecting real-world conditions, that is conducted as a simulated adversarial attempt to compromise organizational missions and/or business processes to provide a comprehensive assessment of the security capability of the information system and organization (source).

- Domain name: A label that identifies a network domain using the Domain Naming System (source).

- Certificate transparency: A framework for publicly logging the existence of Transport Layer Security (TLS) certificates as they are issued or observed in a manner that allows anyone to audit CA activity and notice the issuance of suspect certificates as well as to audit the certificate logs themselves (source).

- External Attack Surface Management: External attack surface management (EASM) helps organizations identify and manage risks associated with Internet-facing assets and systems. The goal is to uncover threats that are difficult to detect, such as shadow IT systems, so you can better understand your organization’s true external attack surface (source).

- Content Management System: A content management system (CMS) is computer software used to manage the creation and modification of digital content (source).

- Network ACL: A network access control list (ACL) is made up of rules that either allow access to a computer environment or deny it. In a way, an ACL is like a guest list at an exclusive club (source).

- Vulnerability scanner: A tool (hardware and/or software) used to identify hosts/host attributes and associated vulnerabilities (source).

- Configuration management database: Also called CMDB, it is a file that clarifies the relationships between the hardware, software, and networks used by an IT organization (source).

Context

🌏 Over the past few years, it has become easy to deploy new services to seize a new business opportunity. Popular cloud providers offer cheap and scalable services to quickly deploy a web-based application. The direct consequence is that, today, every company exposes more and more services on the Internet. From a business perspective, cloud-based services are a great lever to seize an opportunity or to quickly deploy a new service to consumers (clients or partners). This context has also fueled shadow IT.

🤔 From a security perspective, this has led to a loss of visibility over the attack surface exposed by the company. All cloud providers do offer features to secure and monitor exposed services, but these assume that the company knows the service exists and on which cloud provider it is deployed.

Objective of the post

🎯 This post proposes an idea, alongside a technical proof of concept, to identify assets exposed on the Internet (here, an asset is a web-based service) in order to build an attack surface inventory in a continuous, maintainable, and, as much as possible, automated way.

💡 The proposed idea relied only on open-source tools and public sources of information. The goal was to help a company get started in taking back control over its exposed attack surface.

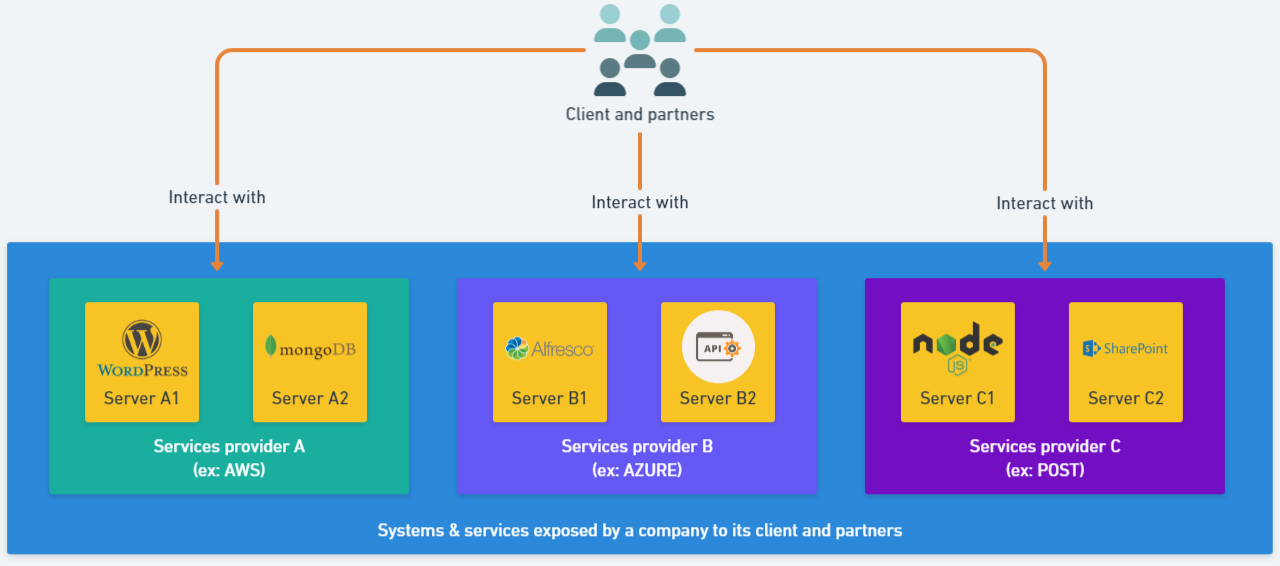

💬 Note that this activity is also known as identifying the “External Attack Surface”. Below is a visual representation of an attack surface exposed by a company:

Overview of the idea

📋 The proposed idea was based on the following elements:

- Use public sources of information.

- Use the reconnaissance techniques used by attackers.

- Use the same free and open-source tools as attackers.

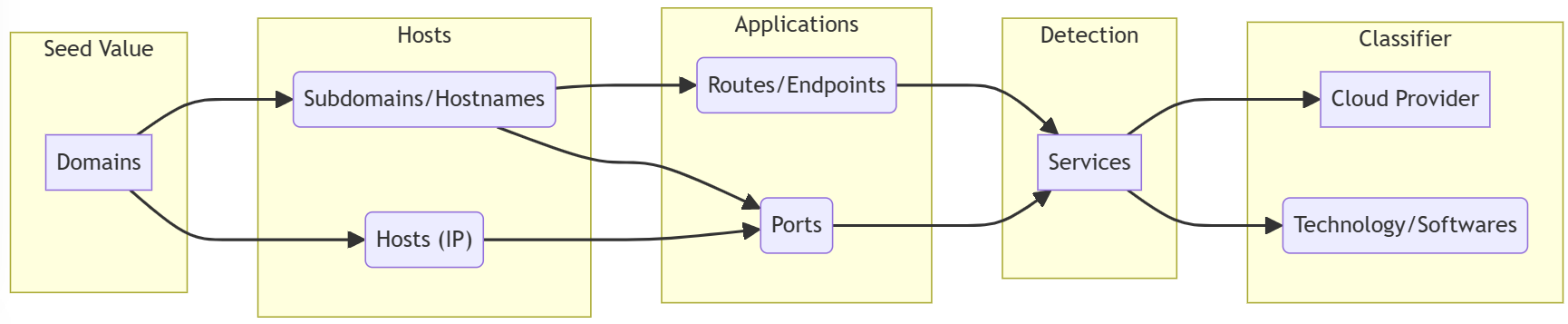

⚒ The elements above were used to create an Assets Collection Pipeline (hereafter ACP) with the following flow (read from left to right):

🤝 This flow was adapted from an initial concept created by Moses Frost for his Red Team exercises.

💻 The flow above took a company’s base domain name as input (called the seed) and, once executed, gathered the following information:

- Domains & subdomains used or owned.

- Cloud providers used.

- Services exposed.

🔬 Such information was used to:

- Build an actual, up-to-date inventory of the services exposed to the Internet.

- Validate unexpected services identified.

- Feed the next run.
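The feedback loop above (validate what is unexpected, then feed the next run) can be sketched in a few lines of Python. This is a minimal sketch, not the author’s pipeline: `run_acp` and the `discover` callable are hypothetical names, `discover` stands in for the real discovery tools, and assets are reduced to plain hostnames.

```python
from typing import Callable, Iterable, Set, Tuple


def run_acp(
    seed: str,
    known_inventory: Set[str],
    discover: Callable[[str], Iterable[str]],
    max_runs: int = 5,
) -> Tuple[Set[str], Set[str]]:
    """Iterate discovery until no new asset appears (or max_runs is reached).

    Returns (inventory, to_validate): the updated inventory, and the assets
    absent from the known inventory that must be validated by a human.
    """
    inventory = set(known_inventory)
    to_validate: Set[str] = set()
    seeds = {seed}
    for _ in range(max_runs):
        found: Set[str] = set()
        for current in seeds:
            found.update(discover(current))
        new_assets = found - inventory
        if not new_assets:
            break  # nothing new: the inventory is stable for now
        to_validate |= new_assets  # unexpected assets to validate
        inventory |= new_assets
        seeds = new_assets  # results of this run feed the next run


    return inventory, to_validate


# Usage with a fake discovery function (hypothetical stand-in for the real tools).
FAKE_RESULTS = {
    "example.com": ["www.example.com", "shop.example.com"],
    "shop.example.com": ["api.shop.example.com"],
}
inventory, to_validate = run_acp(
    "example.com", {"www.example.com"}, lambda name: FAKE_RESULTS.get(name, [])
)
```

Here “www.example.com” is already known, so only “shop.example.com” and the “api.shop.example.com” host it led to end up in `to_validate`.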

Source of information and tools

📡 The ACP used the following public sources of information:

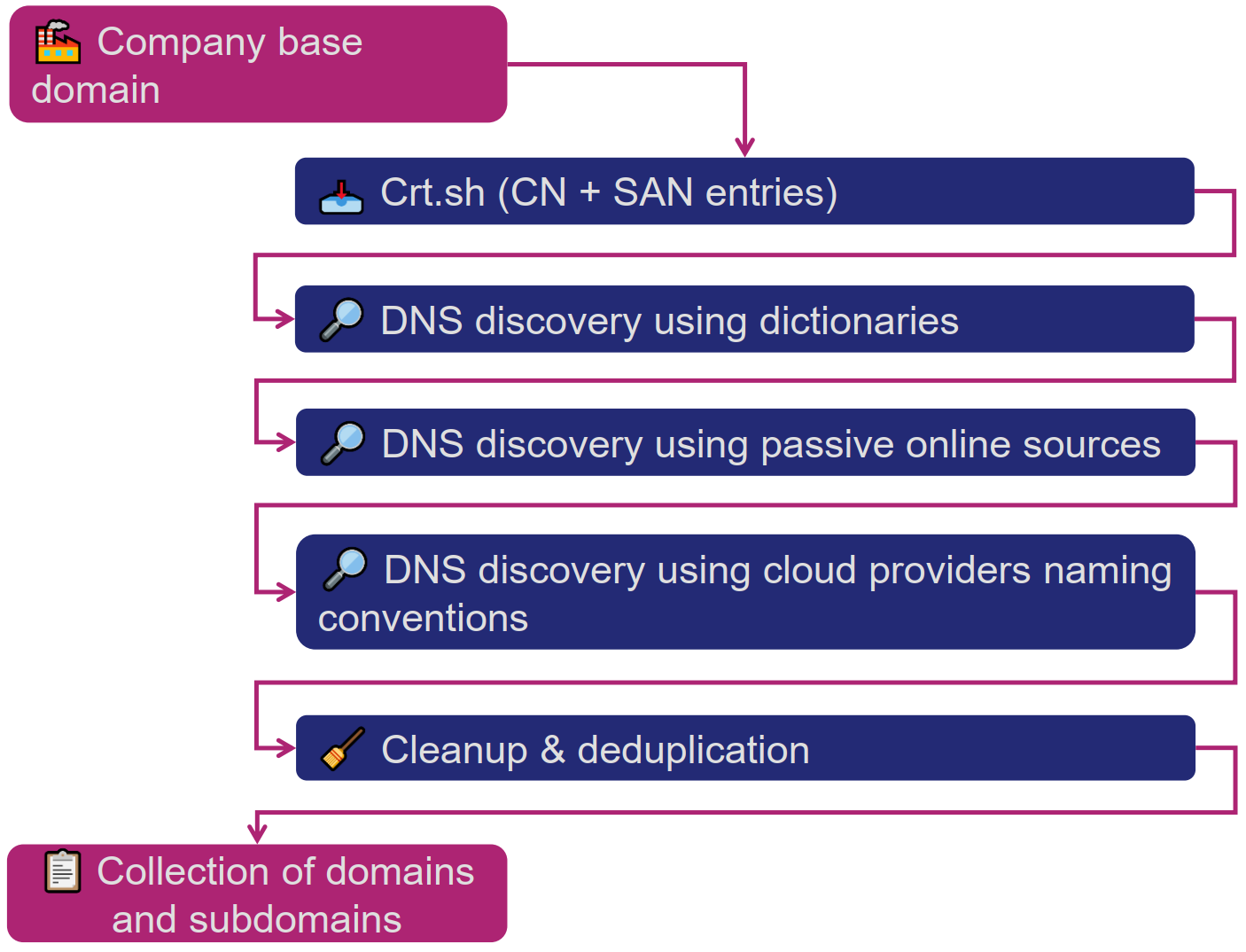

- Certificate Transparency logs, queried through the https://crt.sh data provider, for the domains and subdomains related to the company’s main domain name.

- Passive online sources like the following:

- Dictionaries of common subdomain names, provided by the SecLists project, to discover new subdomains.

- Cloud provider service naming conventions, such as the one provided by NetSPI for Azure services.

- Your current inventory and custom dictionaries based on your own naming conventions.
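To illustrate the Certificate Transparency source, here is a minimal sketch that queries crt.sh and keeps the hostnames belonging to the seed domain. It assumes that crt.sh’s JSON output (`output=json`) exposes a `name_value` field holding one or more newline-separated names; treat that field name as an assumption, and `fetch_crtsh` / `extract_subdomains` as hypothetical helpers rather than the author’s script.

```python
import json
import urllib.parse
import urllib.request
from typing import Iterable, List, Set

# crt.sh JSON endpoint; "%" is the wildcard, so "%.example.com" matches all subdomains.
CRT_SH_URL = "https://crt.sh/?q={query}&output=json"


def fetch_crtsh(domain: str, timeout: float = 60.0) -> List[dict]:
    """Download the certificate entries crt.sh knows for *.domain (network call)."""
    query = urllib.parse.quote(f"%.{domain}")  # "%" must be sent as "%25"
    with urllib.request.urlopen(CRT_SH_URL.format(query=query), timeout=timeout) as resp:
        return json.load(resp)


def extract_subdomains(entries: Iterable[dict], domain: str) -> Set[str]:
    """Keep the unique hostnames that belong to `domain`."""
    domain = domain.lower()
    suffix = "." + domain
    names: Set[str] = set()
    for entry in entries:
        # Assumed field: "name_value" holds one or more names separated by newlines.
        for name in str(entry.get("name_value", "")).splitlines():
            name = name.strip().lower()
            if name.startswith("*."):
                name = name[2:]  # a wildcard certificate still reveals its parent zone
            if name == domain or name.endswith(suffix):
                names.add(name)
    return names
```

For example, `extract_subdomains(fetch_crtsh("thalesgroup.com"), "thalesgroup.com")` would return the names seen in CT logs; the suffix check discards look-alike domains such as `notexample.com` when the seed is `example.com`.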

⚒ The following free and open-source tools were used to leverage the gathered data and reach the objective of the ACP:

- Curl: To perform raw HTTP requests.

- Nmap: To discover open ports.

- JQ: To process JSON data.

- Dnsx & SubFinder: To discover new subdomains.

- Nuclei: To discover exposed services on domains & subdomains.

- Shell scripting and Python for the logic.
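The dictionary-based discovery performed with dnsx can be sketched as follows: build candidate hostnames from a wordlist (such as a SecLists file), then keep those that resolve. This is an illustration only: it swaps in the Python standard-library resolver for dnsx and lacks the features of a dedicated resolver (for example, wildcard DNS handling); `candidate_hosts`, `resolve`, and `resolve_all` are hypothetical names.

```python
import socket
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Optional


def candidate_hosts(domain: str, words: Iterable[str]) -> List[str]:
    """Build brute-force candidates such as 'dev.example.com' from a wordlist."""
    seen = set()
    candidates = []
    for word in words:
        word = word.strip().lower()
        if not word or word.startswith("#"):
            continue  # skip blank lines and comments that some wordlists contain
        host = f"{word}.{domain}"
        if host not in seen:
            seen.add(host)
            candidates.append(host)
    return candidates


def resolve(host: str) -> Optional[List[str]]:
    """Return the sorted IP addresses of `host`, or None if it does not resolve."""
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return None
    return sorted({info[4][0] for info in infos})  # sockaddr[0] is the address


def resolve_all(hosts: Iterable[str], workers: int = 20) -> Dict[str, List[str]]:
    """Resolve hosts concurrently and keep only the live ones."""
    hosts = list(hosts)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(resolve, hosts))
    return {host: ips for host, ips in zip(hosts, results) if ips}
```

A typical call would be `resolve_all(candidate_hosts("example.com", open(wordlist_path)))`, where `wordlist_path` points to one of the dictionaries mentioned above.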

Proof of concept

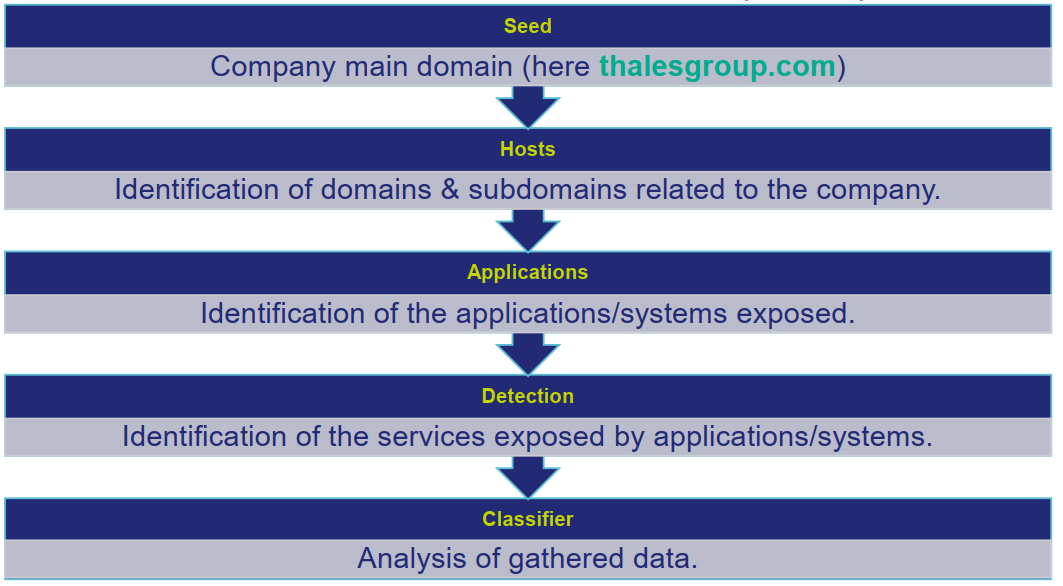

👨💻 To validate the proposed idea, a proof of concept of an ACP (named MyACP) was created, using Thales’s base domain name as the seed: thalesgroup.com.

⚒ MyACP was the following:

🏭 Important point to keep in mind: creating an effective ACP is an incremental process, and the ratio of missed items decreases over successive iterations, because the results of each iteration are used to tune the ACP and feed the next one.

💬 CSV was used for the output data because its flat, column-based format allows quick consultation in Microsoft Excel. This was a personal choice; you can use any format you want to represent the data gathered by your ACP.

MyACP: Hosts

⚒ For this step, the following flow was applied:

👨💻 The following scripts were created to perform the corresponding processing:

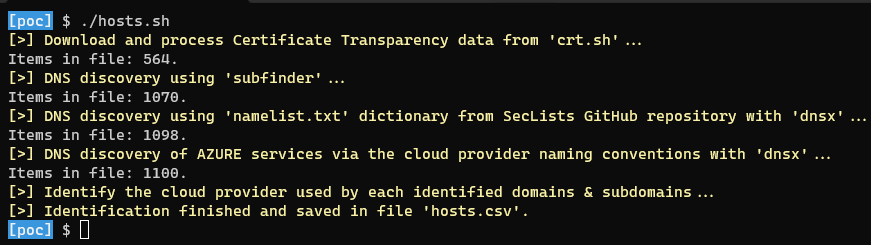

👨💻 Executing the script “hosts.sh” saved the data into a file named “hosts.csv”:

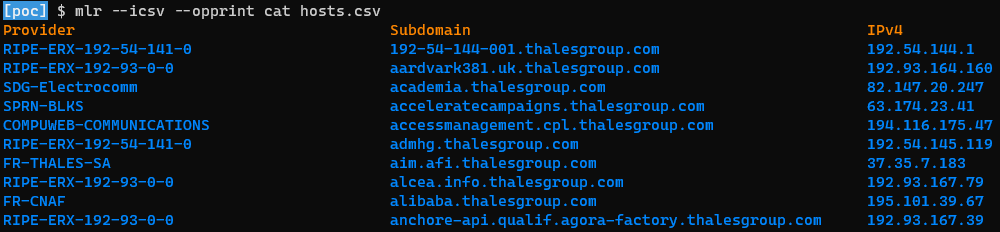

👀 Overview of the results, using Miller to display the CSV content:

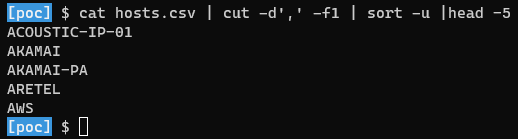

👀 Overview of the cloud providers used by the hosts identified:

🎯 So, at this point, hosts identified from the seed were gathered into the file “hosts.csv”. This file was used for the next step of my ACP.
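One way to obtain the cloud provider column is to match each resolved IP address against the ranges that providers publish (AWS, for instance, publishes an `ip-ranges.json` file). The sketch below shows that matching with Python’s `ipaddress` module and writes a flat CSV. The provider names, the ranges (taken from RFC 5737/RFC 3849 documentation blocks), and the `host`/`ip`/`cloud_provider` columns are hypothetical and do not reflect the real “hosts.csv” layout.

```python
import csv
import io
import ipaddress
from typing import Dict, Iterable, List, Optional, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical ranges from documentation address blocks (RFC 5737 / RFC 3849).
# Replace them with the lists that each cloud provider publishes.
PROVIDER_RANGES: Dict[str, List[str]] = {
    "provider-a": ["192.0.2.0/24"],
    "provider-b": ["198.51.100.0/24", "2001:db8::/32"],
}


def build_index(ranges: Dict[str, List[str]]) -> List[Tuple[str, Network]]:
    """Parse every CIDR once so that lookups do not re-parse strings."""
    return [(name, ipaddress.ip_network(cidr)) for name, cidrs in ranges.items() for cidr in cidrs]


def cloud_provider(ip: str, index: List[Tuple[str, Network]]) -> Optional[str]:
    """Return the provider whose published range contains `ip`, if any."""
    address = ipaddress.ip_address(ip)
    for name, network in index:
        if address in network:  # simply False when IPv4/IPv6 versions differ
            return name
    return None


def write_hosts_csv(rows: Iterable[Dict[str, str]], out) -> None:
    """Write flat rows; open real files with newline="" as the csv module expects."""
    writer = csv.DictWriter(out, fieldnames=["host", "ip", "cloud_provider"])
    writer.writeheader()
    writer.writerows(rows)


INDEX = build_index(PROVIDER_RANGES)
resolved = [("app.example.com", "192.0.2.10"), ("www.example.com", "203.0.113.5")]
buffer = io.StringIO()
write_hosts_csv(
    ({"host": h, "ip": ip, "cloud_provider": cloud_provider(ip, INDEX) or "unknown"} for h, ip in resolved),
    buffer,
)
```

A linear scan is enough for a sketch; with the full published lists (thousands of prefixes), sorting the networks or grouping them by prefix length would speed up lookups.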

MyACP: Applications

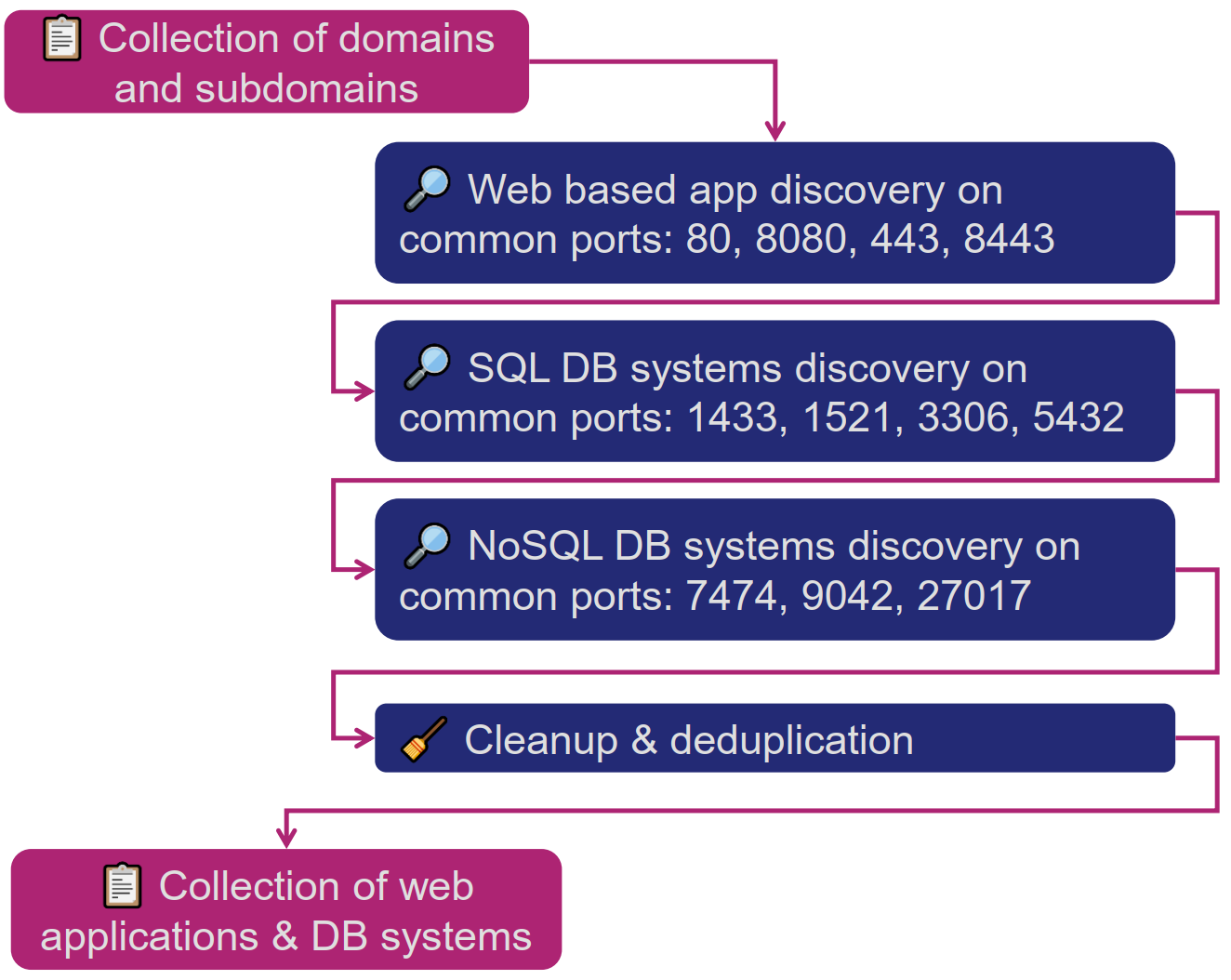

💡 For the POC, the focus was on web applications and the following SQL/NoSQL database systems:

- SQL Server, Oracle DB, MySQL, PostgreSQL.

- Neo4J, Cassandra, MongoDB.
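The systems above map to well-known default ports, which is what a port scan targets. The sketch below lists those defaults, formats them for Nmap’s `-p` option, and shows a plain TCP connect check as a stand-in for Nmap. The web ports (80, 443, 8080, 8443) are an added assumption, services may listen on non-default ports, and `nmap_port_list` / `tcp_open` are hypothetical helpers, not the author’s script.

```python
import socket
from typing import Dict, List

# Default ports of the listed systems (defaults only: a service may listen elsewhere).
# The "web" entry is an assumption added for illustration.
DEFAULT_PORTS: Dict[str, List[int]] = {
    "web": [80, 443, 8080, 8443],
    "SQL Server": [1433],
    "Oracle DB": [1521],
    "MySQL": [3306],
    "PostgreSQL": [5432],
    "Neo4j": [7474, 7687],  # HTTP browser and Bolt protocol
    "Cassandra": [9042],  # CQL native transport
    "MongoDB": [27017],
}


def nmap_port_list(ports: Dict[str, List[int]] = DEFAULT_PORTS) -> str:
    """Format the unique ports for Nmap's -p option, e.g. '80,443,1433'."""
    return ",".join(str(p) for p in sorted({p for plist in ports.values() for p in plist}))


def tcp_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Full TCP connect check: True if the port accepts a connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

A full connect check is slower and noisier than Nmap’s scan types, but it needs no privileges and makes the logic of this step easy to follow.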

⚒ For this step, the following flow was applied against identified hosts:

👨💻 The following script was created to perform the corresponding processing:

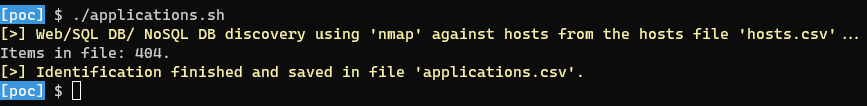

👨💻 Executing the script “applications.sh” saved the data into a file named “applications.csv”:

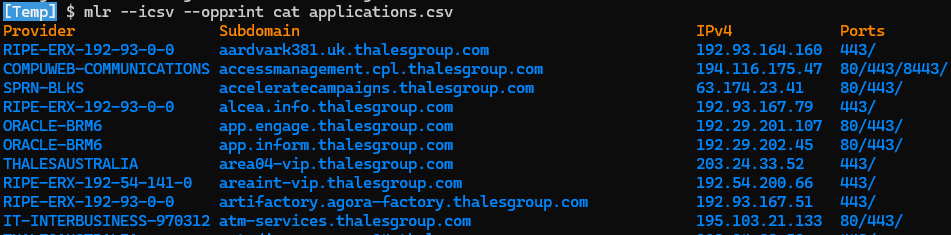

👀 Overview of the results:

🎯 So, at this point, the applications (more precisely, services) identified from the collected hosts were gathered into the file “applications.csv”. This file was used for the next step of my ACP.
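As a hedged illustration of how Nmap results can become host:port tuples, the sketch below assumes Nmap was run with grepable output (`-oG`) and extracts one (ip, hostname, port, service) tuple per open TCP port. The regular expression reflects the grepable format as we understand it (`port/state/protocol/owner/service/rpc/version/` entries separated by “, ”); version strings containing “, ” are not handled, and Nmap’s XML output (`-oX`) would be a sturdier input. `parse_grepable` is a hypothetical name.

```python
import re
from typing import List, Tuple

# Assumed line shape of Nmap grepable output (-oG):
# Host: <ip> (<name>)<TAB>Ports: 80/open/tcp//http///, 443/open/tcp//https///<TAB>...
_HOST_RE = re.compile(r"^Host: (\S+) \(([^)]*)\)\s+Ports: (.*?)(?:\t|$)")


def parse_grepable(text: str) -> List[Tuple[str, str, int, str]]:
    """Return (ip, hostname, port, service) for every open TCP port."""
    services = []
    for line in text.splitlines():
        match = _HOST_RE.match(line)
        if not match:
            continue  # comment lines and "Status:" lines carry no ports
        ip, hostname, ports_field = match.groups()
        for entry in ports_field.split(", "):
            fields = entry.split("/")
            if len(fields) < 5:
                continue  # malformed entry
            port, state, proto, _owner, service = fields[:5]
            if state == "open" and proto == "tcp":
                services.append((ip, hostname, int(port), service))
    return services
```

Filtered ports are dropped on purpose: only services that actually answered should feed the detection step.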

MyACP: Detection

✅ For this step, the POC was limited to identifying web applications (services), so as not to perform any illegal action or cause problems on the target side. No offensive HTTP requests were sent.
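To make the “no offensive request” constraint concrete, here is a minimal sketch of a benign probe: a single `GET /` per host:port tuple, recording only the status code and the `Server` header. It uses Python’s standard `urllib` for illustration and is not the author’s “detection.sh” (which relied on tools such as curl and Nuclei); the `acp-probe` User-Agent is a hypothetical value.

```python
import http.client
import ssl
import urllib.error
import urllib.request
from typing import Optional

# Discovery only needs to know that something answers, so certificate checks are
# disabled here. Never reuse this context to send credentials or data.
_TLS = ssl.create_default_context()
_TLS.check_hostname = False  # must be disabled before CERT_NONE is allowed
_TLS.verify_mode = ssl.CERT_NONE

# Empty proxy mapping: connect directly, as an external client would.
_OPENER = urllib.request.build_opener(
    urllib.request.ProxyHandler({}),
    urllib.request.HTTPSHandler(context=_TLS),
)


def probe(host: str, port: int, timeout: float = 5.0) -> Optional[dict]:
    """Send one benign "GET /" (HTTP first, then HTTPS) and report what answered."""
    for scheme in ("http", "https"):
        url = f"{scheme}://{host}:{port}/"
        request = urllib.request.Request(url, headers={"User-Agent": "acp-probe"})
        try:
            with _OPENER.open(request, timeout=timeout) as resp:
                return {"url": url, "status": resp.status, "server": resp.headers.get("Server", "")}
        except urllib.error.HTTPError as err:
            # A 4xx/5xx answer still proves that a web application is listening.
            return {"url": url, "status": err.code, "server": err.headers.get("Server", "")}
        except (urllib.error.URLError, http.client.HTTPException, OSError):
            continue  # closed port, non-HTTP service, TLS mismatch: try the next scheme
    return None
```

Trying HTTP first is a simplification: an HTTPS-only port may answer a plain request with an error status, which is still recorded as a live web service.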

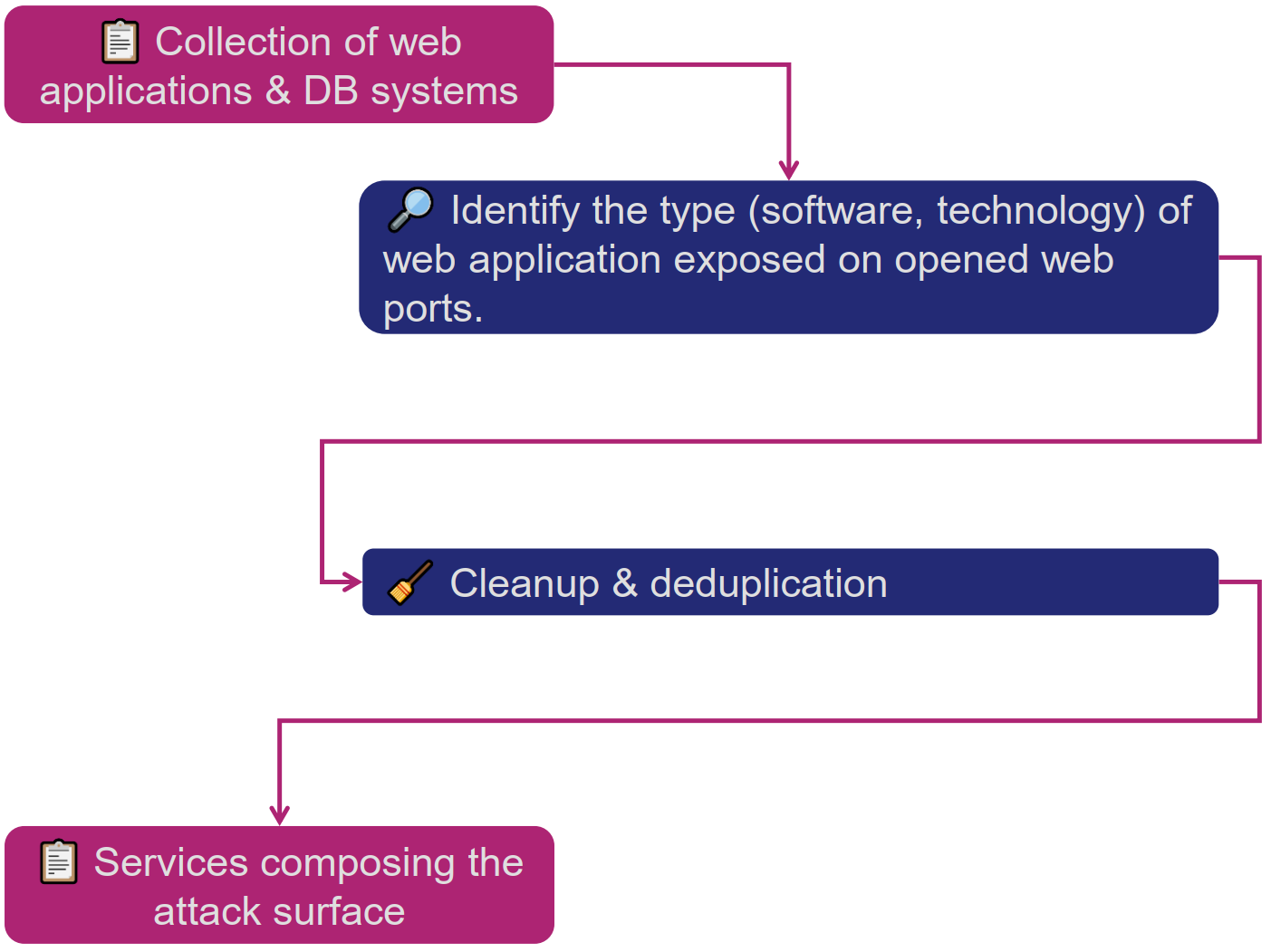

⚒ For this step, the following flow was applied against the identified applications (services expressed as host:port tuples):

👨💻 The following script was created to perform the corresponding processing:

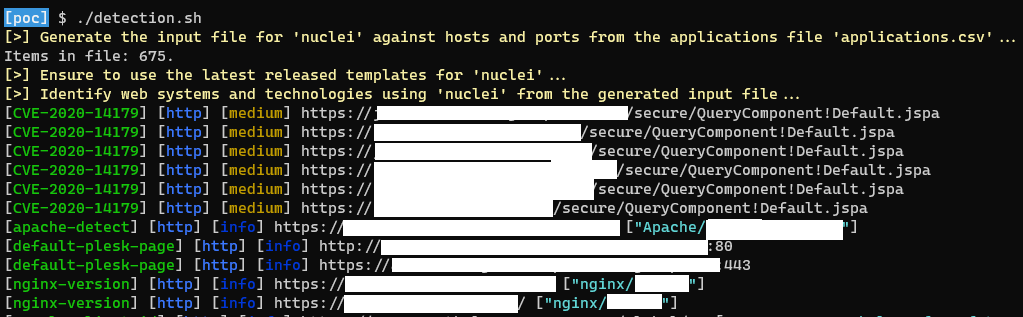

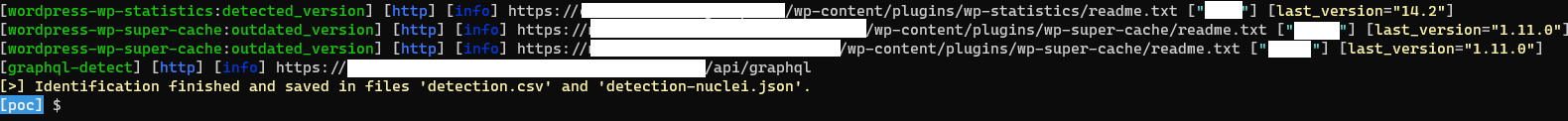

👨💻 Executing the script “detection.sh” saved the data into files named “detection.csv” and “detection-nuclei.json”:

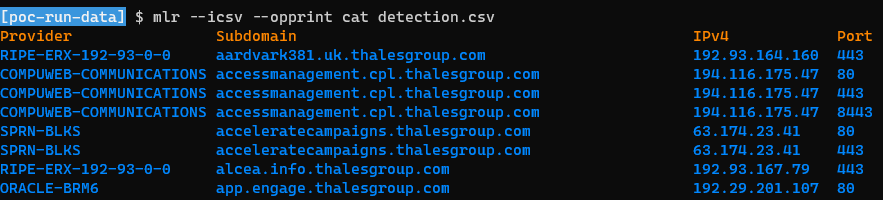

👀 Overview of the results:

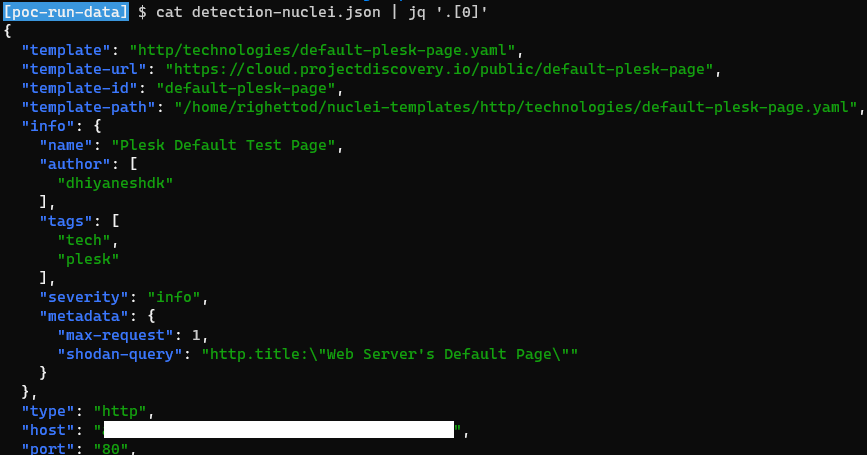

📚 The file “detection.csv” contained all hosts on which at least one active web application was identified. The file “detection-nuclei.json” contained the technical details of each identified application.

💬 The second file also contained the information of the first one, but two separate files were created to keep the processing consistent and to make the “classifier” step easier.

🎯 So, at this point, the following data were gathered based on the seed:

🤔 The difference between the number of entries found at each step was expected: some identified subdomains were no longer bound to any IP address, and some live subdomains were not used for web-related activities.

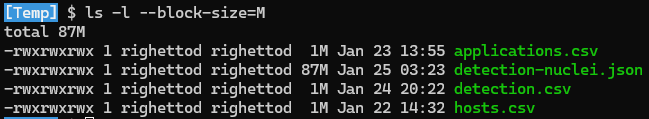

💬 JSON was used for the Nuclei output because it is easier to process for large and detailed data sets; the Nuclei output file was 87 MB:

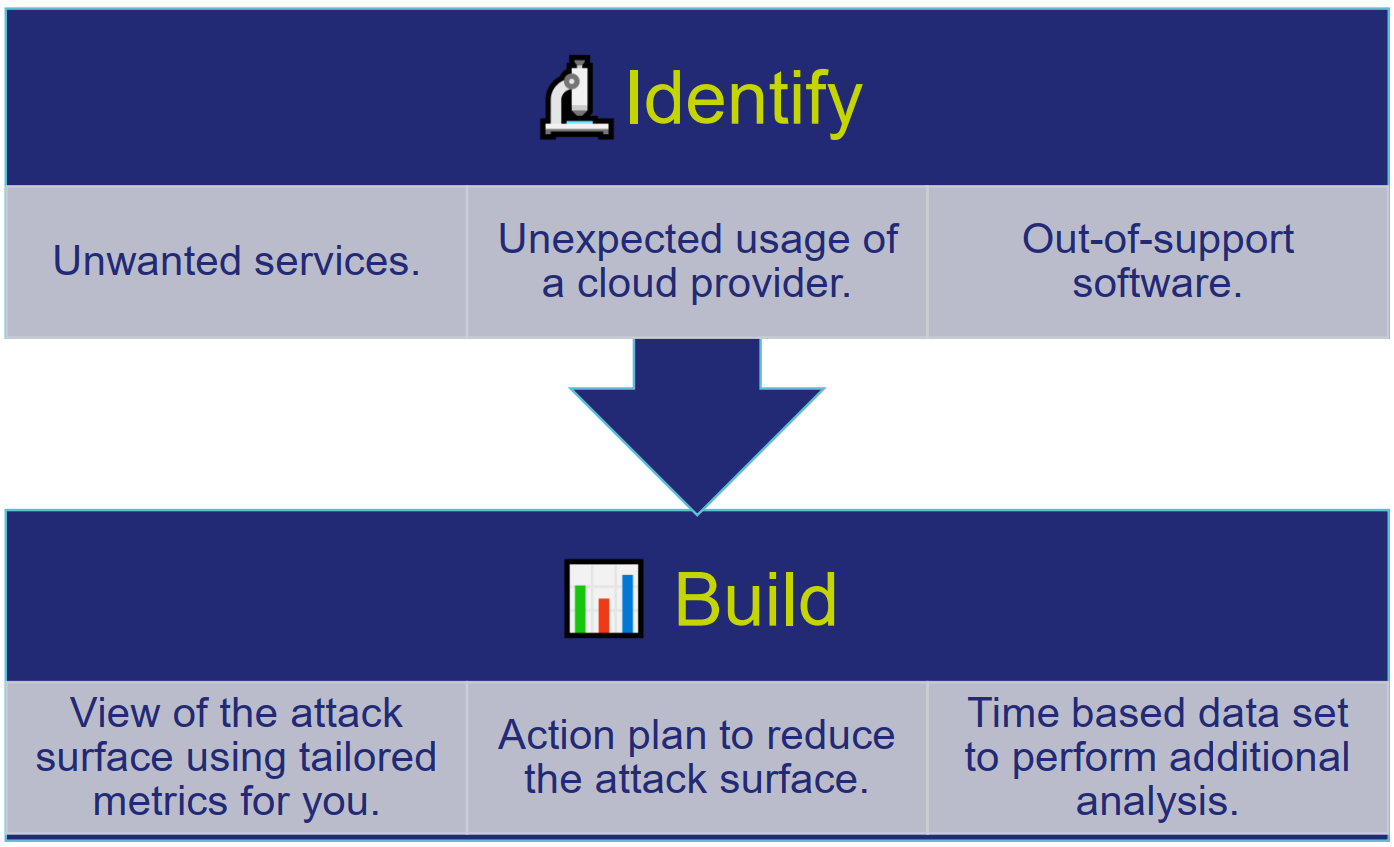

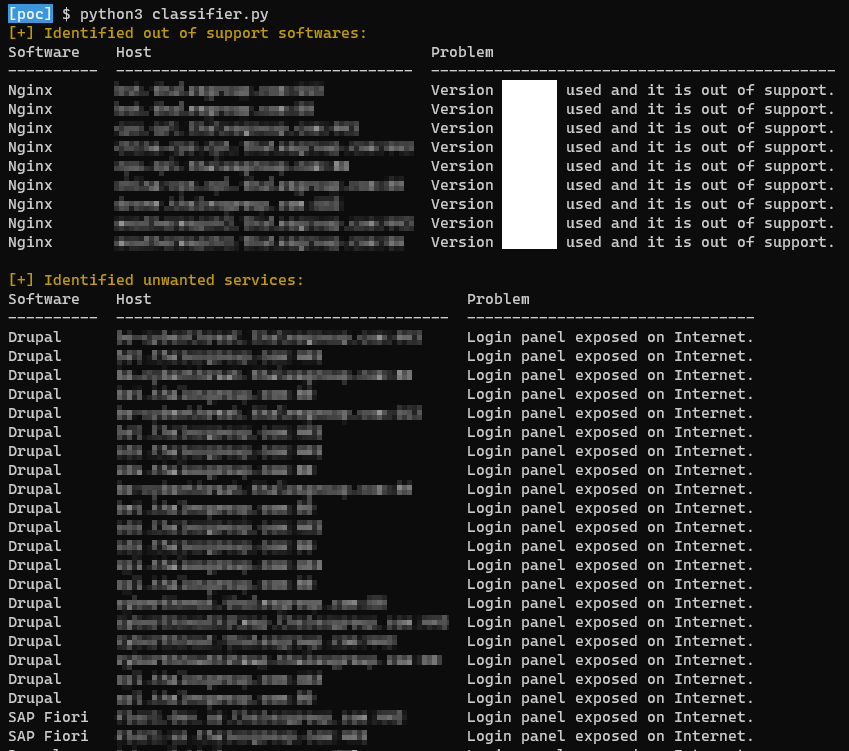

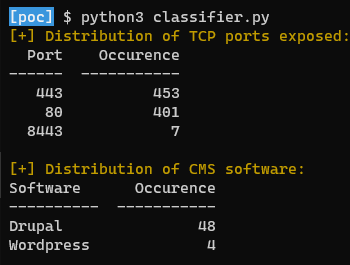

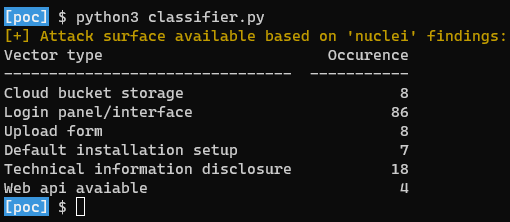

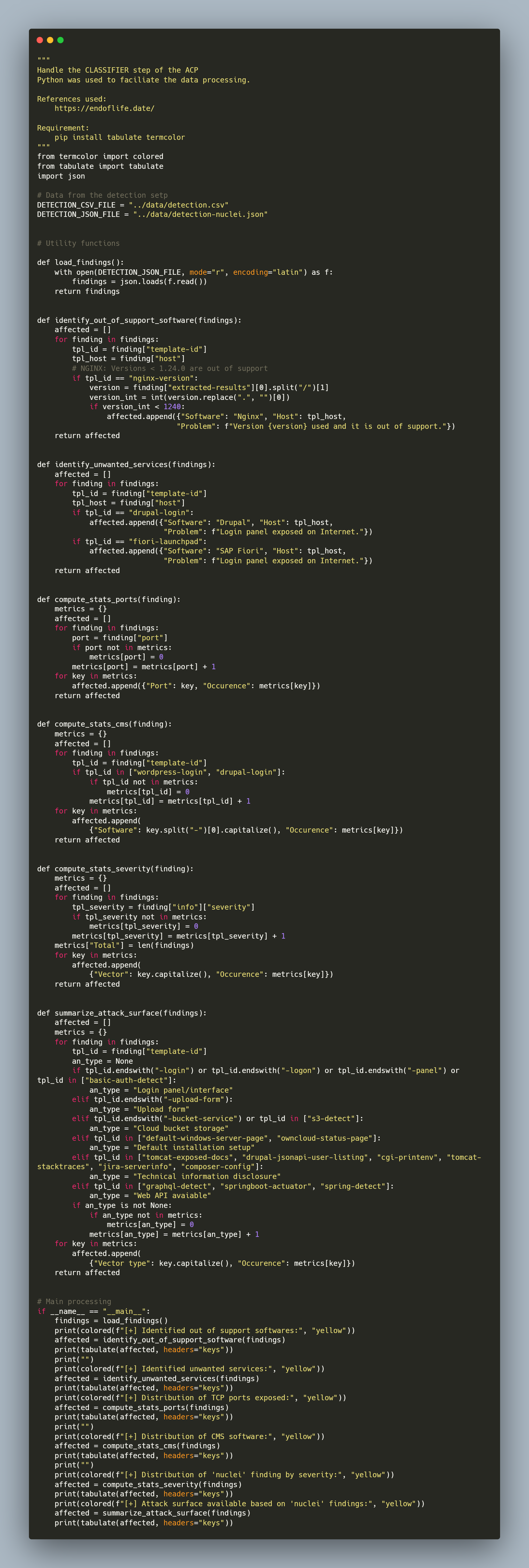
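With a Nuclei output of this size, streaming the file line by line avoids loading it entirely into memory. The sketch below assumes the file holds one JSON object per line (JSON Lines) and that findings carry `template-id` (older Nuclei releases used `templateID`), `info.severity`, and `host` fields; these field names depend on the Nuclei version and are assumptions, as are the helper names.

```python
import json
from collections import Counter
from typing import IO, Iterator, Set, Tuple


def iter_findings(stream: IO[str]) -> Iterator[dict]:
    """Yield one finding per JSON line, so the whole file never sits in memory."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


def summarize(stream: IO[str]) -> Tuple[Counter, Counter, Set[str]]:
    """Count findings per template and per severity, and collect the hosts seen."""
    by_template: Counter = Counter()
    by_severity: Counter = Counter()
    hosts: Set[str] = set()
    for finding in iter_findings(stream):
        # Assumed field names: "template-id" (older releases used "templateID").
        template = finding.get("template-id") or finding.get("templateID") or "unknown"
        severity = finding.get("info", {}).get("severity", "unknown")
        by_template[template] += 1
        by_severity[severity] += 1
        if finding.get("host"):
            hosts.add(finding["host"])
    return by_template, by_severity, hosts
```

For example, `with open("detection-nuclei.json", encoding="utf-8") as fh: templates, severities, hosts = summarize(fh)` gives counters whose `most_common()` method lists the dominant findings.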

MyACP: Classifier

⚒ For this final step, the following approach was applied to process the gathered data:

👨💻 The following script was created to perform the corresponding processing. Python was used to simplify both the processing and the creation of data selection rules:

👀 Out-of-support software and unexpected exposed services:

👀 Distribution of open TCP web ports and of the CMS software used:

👀 Distribution of the attack surface identified by attack vector:

🔬 The “classifier” step is really the one where “you are only limited by your imagination” in terms of analyzing and leveraging the gathered data. For example, you can use the information to feed:

- A vulnerability scanner to identify vulnerabilities, missing security patches, etc. on assets identified.

- A configuration management database (CMDB) to update your inventory of known assets.

- A penetration test to evaluate the security posture of a specific type of identified asset.

- A tool such as ELK to compute statistics and build charts from the data.

- A security incident, to get unwanted services shut down.

- And so on…
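The selection rules mentioned for the classifier can be expressed as an ordered list of predicates, where the first matching rule assigns an attack vector; counting the labels then gives the distribution by attack vector. This is a hypothetical sketch, not the author’s classifier.py: the detection fields (`port`, `product`, `version`), the vector labels, and the out-of-support table are illustrative assumptions to adapt to your own support policy.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, List, Tuple

Detection = Dict[str, object]

# Illustrative rule inputs (assumptions, to adapt to your own context).
DATABASE_PORTS = {1433, 1521, 3306, 5432, 7474, 7687, 9042, 27017}
CMS_PRODUCTS = {"wordpress", "drupal", "joomla"}
# Hypothetical out-of-support table: product -> end-of-life version branches.
OUT_OF_SUPPORT: Dict[str, List[str]] = {"php": ["5"], "apache": ["2.2"]}


def is_out_of_support(d: Detection) -> bool:
    """True when the detected version belongs to an end-of-life branch."""
    product = str(d.get("product", "")).lower()
    version = str(d.get("version", ""))
    return any(
        version == branch or version.startswith(branch + ".")  # "5.6" matches, "50.1" does not
        for branch in OUT_OF_SUPPORT.get(product, [])
    )


# Ordered selection rules: the first matching rule gives the attack vector.
RULES: List[Tuple[str, Callable[[Detection], bool]]] = [
    ("Exposed database", lambda d: d.get("port") in DATABASE_PORTS),
    ("Out-of-support software", is_out_of_support),
    ("CMS", lambda d: str(d.get("product", "")).lower() in CMS_PRODUCTS),
]


def classify(d: Detection, default: str = "Web application") -> str:
    """Return the label of the first rule that matches, else the default vector."""
    for label, predicate in RULES:
        if predicate(d):
            return label
    return default


def distribution(detections: Iterable[Detection]) -> Counter:
    """Count detections per attack vector, ready for a chart or a CMDB export."""
    return Counter(classify(d) for d in detections)
```

Keeping the rules as data makes each review of a run easy to turn into a new rule for the next one.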

Key takeaways

💡 Open-source tools and public sources of information can be used to identify the attack surface exposed by your company.

⚒ The idea and materials proposed in this post can be used freely, as a bootstrap, to create your own ACP.

🏭 It is not “magic”: it requires constant work to reach a useful level of information and to identify newly exposed assets.

📅 It is important to run your ACP on a regular basis (at least once a week) to detect and be aware of newly exposed assets. Ideally, run it from an external Internet connection to get a true outside view and avoid being misled by any network ACL in place.

🔬 It is also important to review the results of each run of your ACP to:

- Enhance the detection logic.

- Feed the next run with already identified assets.

Source code of scripts

💻 This section contains the source code of all the scripts mentioned in this post.

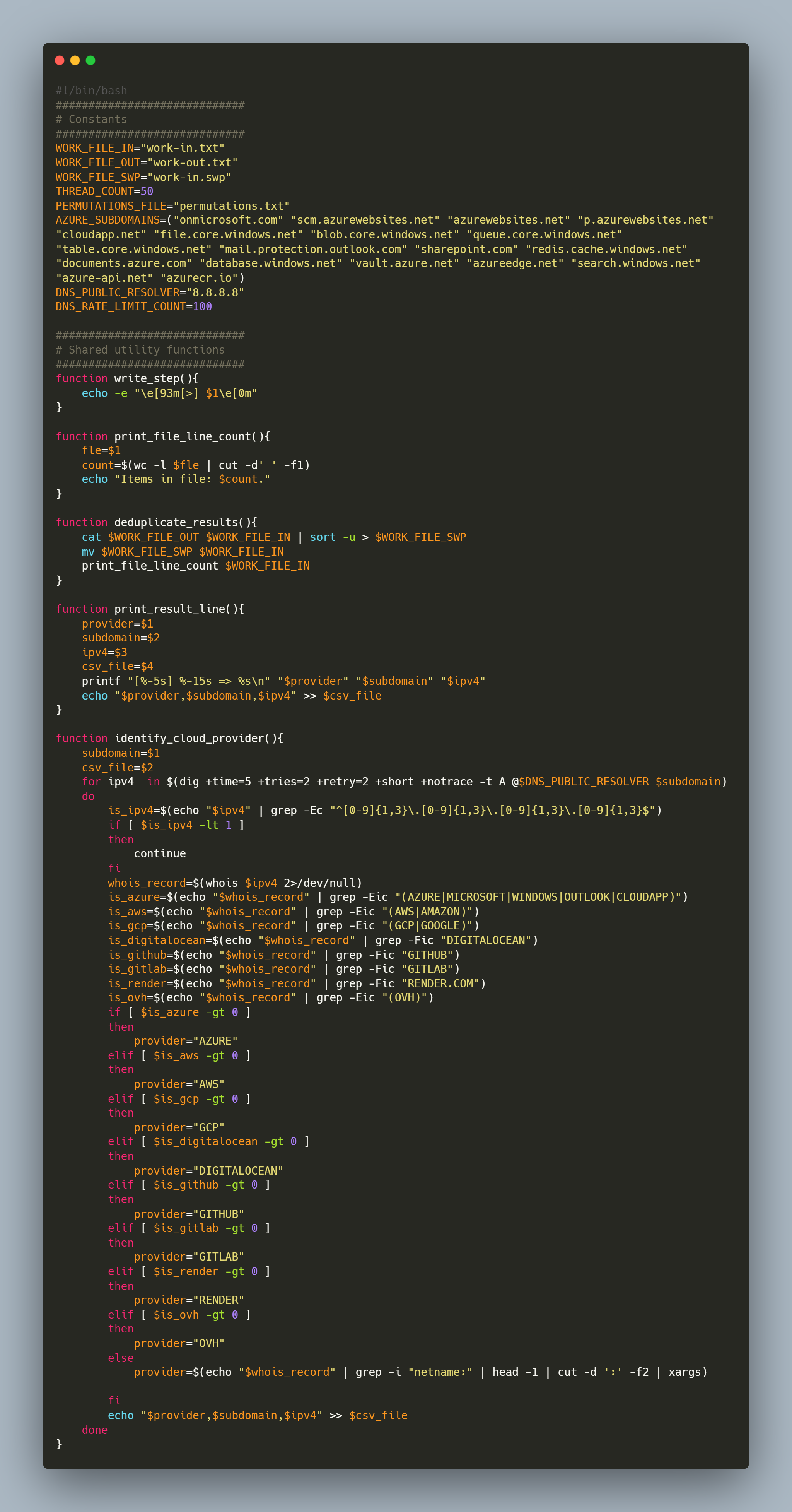

utils.sh

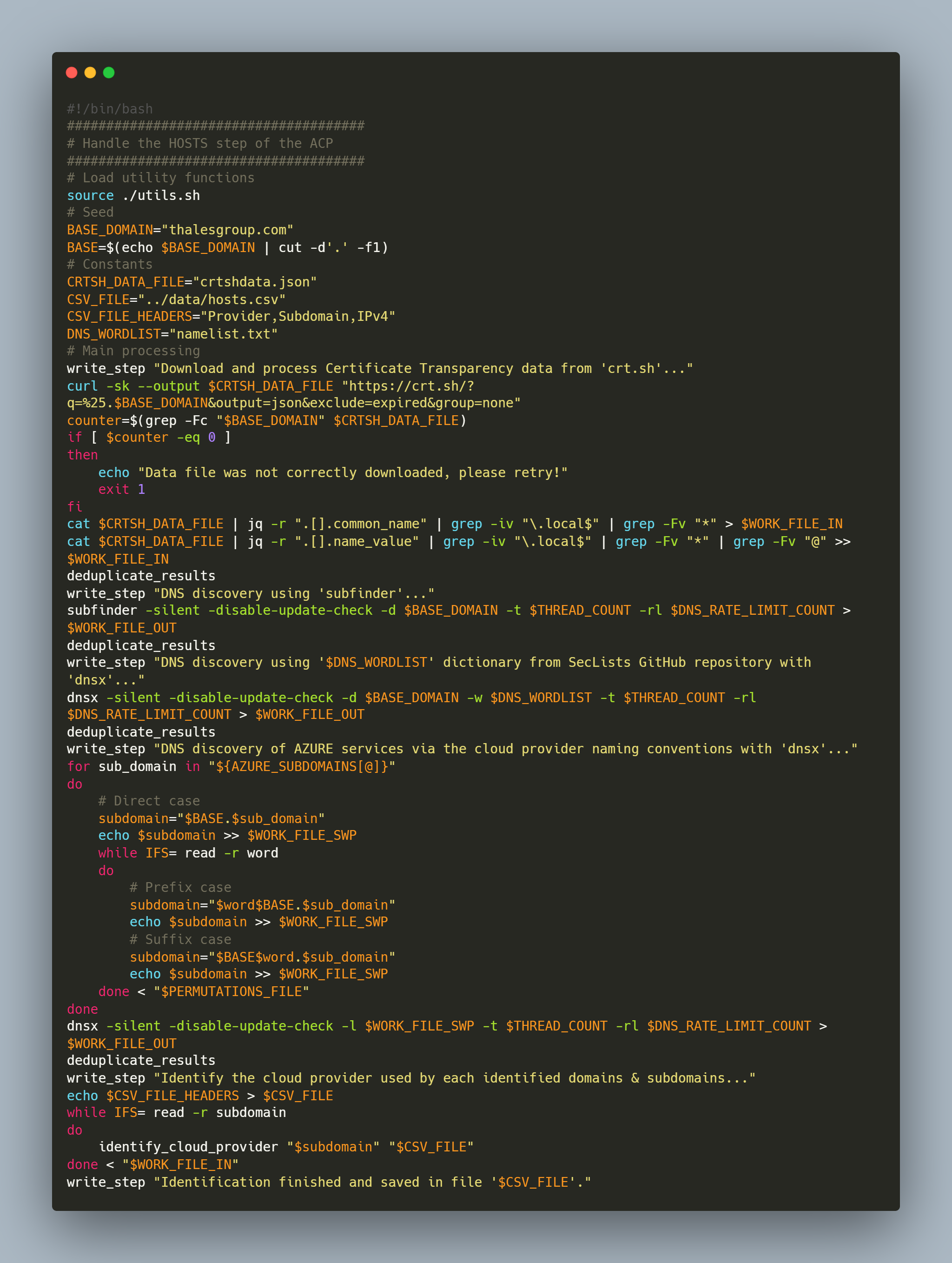

hosts.sh

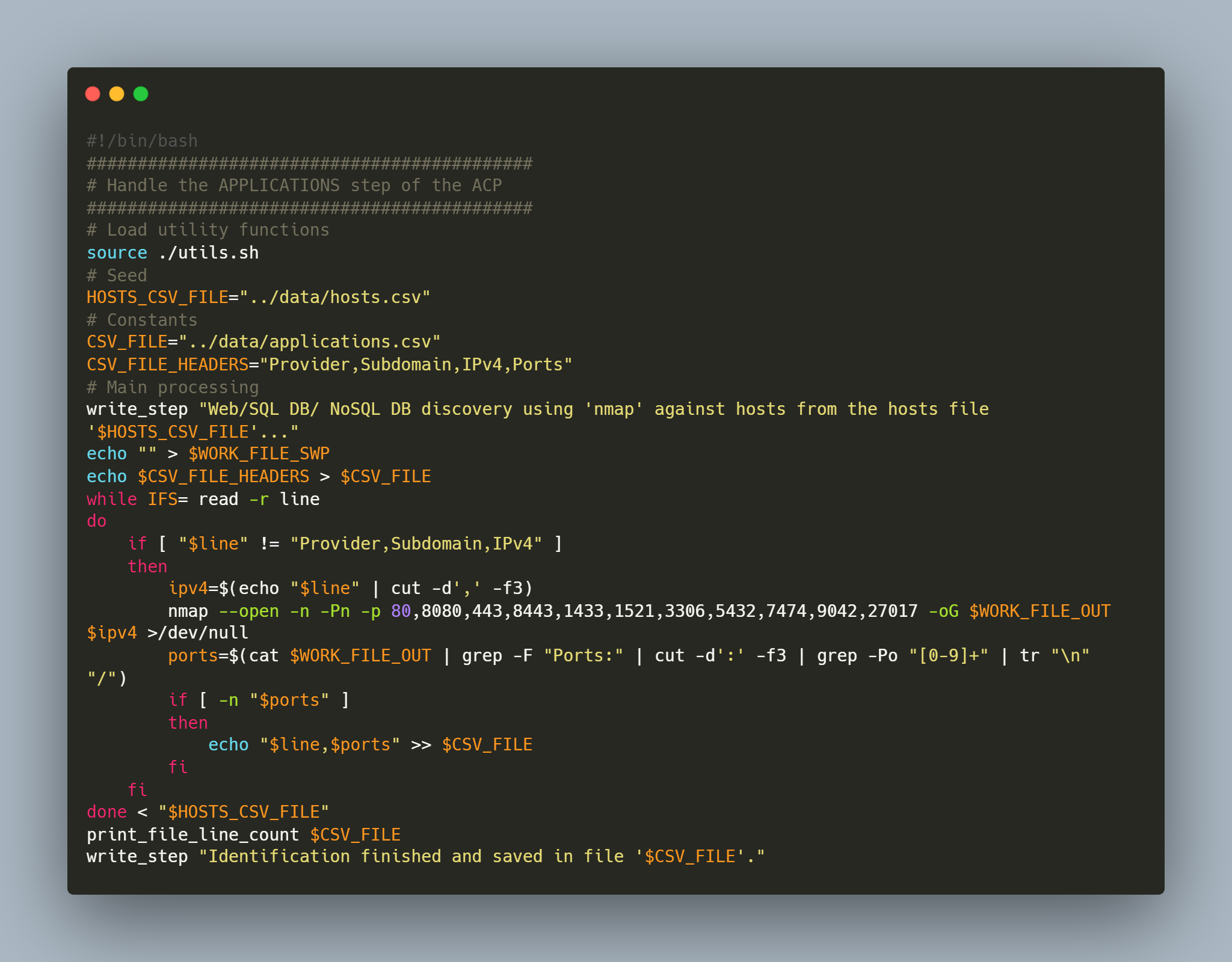

applications.sh

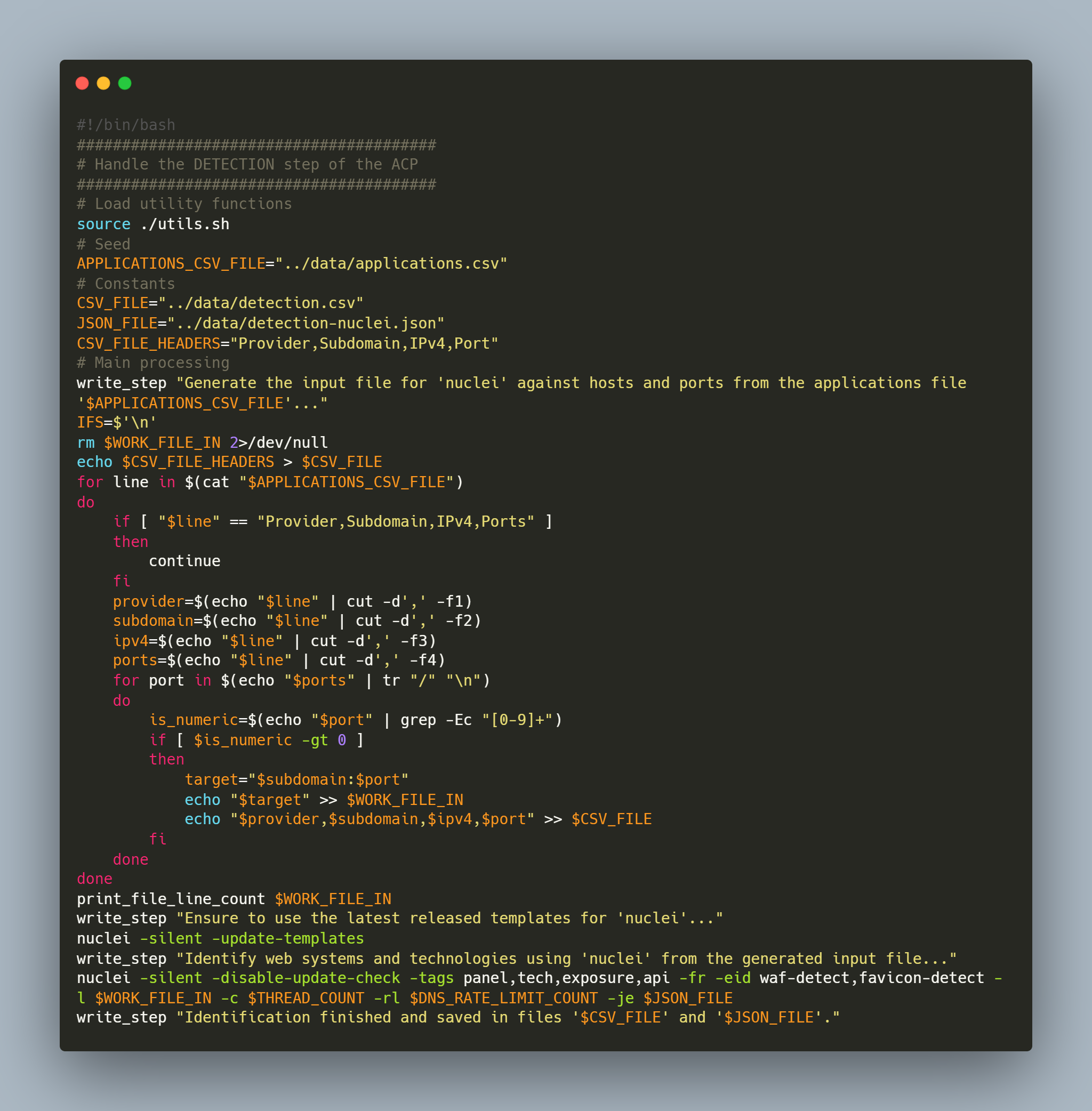

detection.sh

classifier.py

Additional resources

- Methodology for Cloud penetration testing

- Cloud Assets Discovery

- Attack Surface Discovery:

- Subdomain Scanner

- Dictionaries used by scripts: